A/B Testing in Machine Learning – Best Practices & FAQ

A/B testing is a powerful method for improving digital experiences and optimizing business performance. This FAQ guide explores the fundamentals of A/B testing, its applications in machine learning, and how it can help organizations make data-driven decisions.

Reinforcement learning is the problem of getting an agent (i.e., a piece of software) to act in the world (i.e., an environment) so as to maximize its cumulative reward, often using a policy to pick from a set of actions based on contextual information. For example, consider teaching a robot how to accomplish a task: you cannot tell it exactly what to do, but you can reward or punish it for doing the right or wrong thing. Eventually, it has to figure out which of its actions earned the reward or punishment. Similarly, such methods can train machines to perform many tasks, such as playing backgammon, chess, and Atari games, scheduling jobs, and even flying helicopters in simulation. More specifically, in web analytics this approach can be used to conduct A/B testing automatically.

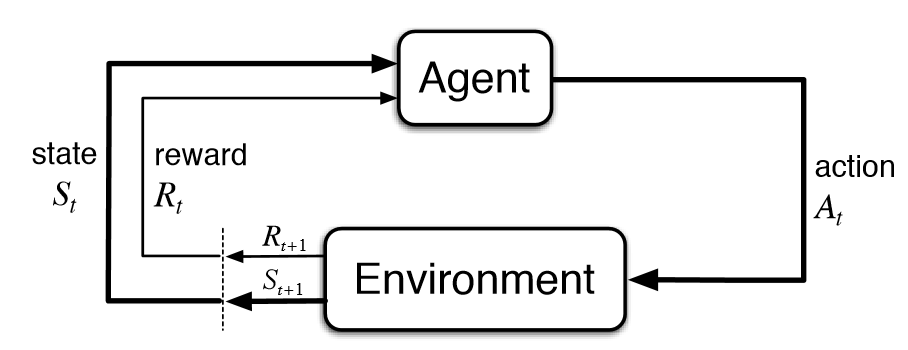

Reinforcement Learning is a type of Machine Learning, and thereby also a branch of Artificial Intelligence. It allows machines and software agents to automatically determine the ideal behavior within a specific context in order to maximize their performance. Simple reward feedback, known as the reinforcement signal, is required for the agent to learn its behavior. Reinforcement Learning is a good fit when data for learning does not yet exist, when it is impractical to wait for that data to accumulate, or when the data changes so rapidly that the outcome keeps shifting. Simply put, a Reinforcement Learning algorithm constructs its own data through experience, identifying the ‘champion policy’ through trial and error and temporal learning. The diagram above illustrates the basic concept underlying the Reinforcement Learning method, summarized as follows:

- The agent and the environment interact at each of a sequence of discrete time steps, $t = 0, 1, 2, 3, \dots$

- At each time step $t$, the agent receives some representation of the environment’s state, $S_t \in \mathcal{S}$, where $\mathcal{S}$ is the set of possible states.

- On that basis, the agent selects an action $A_t \in \mathcal{A}(S_t)$, where $\mathcal{A}(S_t)$ is the set of actions available in state $S_t$.

- One time step later, as a consequence of its action, the agent receives a numerical reward $R_{t+1} \in \mathcal{R} \subset \mathbb{R}$ and finds itself in a new state $S_{t+1}$. The job is to maximize cumulative reward.

- In such a sequential decision process, the goal is to find a policy, often derived from an action-value function, that maximizes the expected discounted sum of future rewards, $G_t = R_{t+1} + \gamma R_{t+2} + \gamma^2 R_{t+3} + \dots$, where $0 \le \gamma \le 1$ is the discount factor.
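To make the discounted sum concrete: for rewards $R_{t+1}, R_{t+2}, \dots$ and a discount factor $0 \le \gamma \le 1$, the return is $G_t = \sum_{k=0}^{\infty} \gamma^k R_{t+k+1}$. The minimal Python sketch below computes it for one finite episode; the function name `discounted_return` and the reward values are illustrative only, not taken from any particular library.

```python
from typing import Sequence


def discounted_return(rewards: Sequence[float], gamma: float) -> float:
    """Return G_t = R_{t+1} + gamma * R_{t+2} + gamma**2 * R_{t+3} + ...

    `rewards` holds R_{t+1}, R_{t+2}, ... for one finite episode.
    """
    if not 0.0 <= gamma <= 1.0:
        raise ValueError("gamma must lie in [0, 1]")
    g = 0.0
    # Work backwards: G_t = R_{t+1} + gamma * G_{t+1}
    for r in reversed(rewards):
        g = r + gamma * g
    return g


# Three steps with reward 1 each and gamma = 0.5: 1 + 0.5 + 0.25
print(discounted_return([1.0, 1.0, 1.0], gamma=0.5))  # 1.75
```

Accumulating from the last reward backwards means each step needs only one multiplication and one addition, with no explicit powers of $\gamma$.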

A/B testing refers to randomized experiments in which subjects are randomly partitioned among treatments. A more advanced version, ‘multivariate testing’, tests several variables at once, in effect running many A/B tests in parallel. In a website design setting, the set of possible layout options constitutes the environment, changing the layout is the action, and an increase in click-through rate (CTR) is the reward. The resulting policy picks the layout that maximizes CTR.
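Automating the layout choice is commonly framed as a multi-armed bandit problem, the single-state special case of Reinforcement Learning. The sketch below uses epsilon-greedy, one standard bandit strategy; the text does not say which algorithm is used in practice, so this choice is an assumption. The layout names, their "true" CTRs, and the simulated clicks are hypothetical stand-ins for real visitor traffic.

```python
import random


def epsilon_greedy_ab_test(true_ctr, n_visitors, epsilon=0.1, seed=0):
    """Simulate an automated A/B test as an epsilon-greedy bandit.

    true_ctr maps each layout to a click probability that the agent does
    not know; it is used only to simulate whether a visitor clicks.
    Returns (shows, clicks): per-layout counts of impressions and clicks.
    """
    rng = random.Random(seed)
    layouts = list(true_ctr)
    shows = {layout: 0 for layout in layouts}
    clicks = {layout: 0 for layout in layouts}

    def estimated_ctr(layout):
        # Observed CTR so far; a layout never shown scores 0.0
        return clicks[layout] / shows[layout] if shows[layout] else 0.0

    for _ in range(n_visitors):
        if rng.random() < epsilon:
            layout = rng.choice(layouts)  # explore: random layout
        else:
            layout = max(layouts, key=estimated_ctr)  # exploit: best so far
        shows[layout] += 1
        # Reward: 1 if the simulated visitor clicks, otherwise 0
        if rng.random() < true_ctr[layout]:
            clicks[layout] += 1
    return shows, clicks


shows, clicks = epsilon_greedy_ab_test(
    {"layout_a": 0.02, "layout_b": 0.05, "layout_c": 0.20},  # hypothetical CTRs
    n_visitors=20_000,
)
for layout in shows:
    ctr = clicks[layout] / shows[layout] if shows[layout] else 0.0
    print(f"{layout}: shown {shows[layout]} times, observed CTR {ctr:.3f}")
```

Unlike a fixed 50/50 split, the bandit shifts traffic toward the better layout while the test is still running. Because unseen layouts start at 0.0, early exploitation favors the first layout until exploration tries the others; optimistic initial estimates or Thompson sampling are common alternatives.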

It should be noted that Reinforcement Learning is an active research area in the machine learning community. As web analytics data becomes a valuable resource for enterprises, the marriage of new and evolving artificial intelligence algorithms with website optimization methodologies holds great promise for digital marketers.

Dr. Elan Sasson, Intlock LTD

FAQs about A/B Testing

What is A/B testing in machine learning?

A/B testing, also known as a controlled experiment, is a method used to compare two versions—A and B—to determine which one performs better on specific metrics. In machine learning, it helps test models, algorithms, or configurations in real-world environments to identify the best solution based on user interaction outcomes.
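A fair comparison requires that each user be assigned to A or B at random and then see the same version on every visit. One common way to do this (an assumption here, not something the text prescribes) is to hash a user ID together with an experiment name. The function `assign_variant` and the identifiers below are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, split_a: float = 0.5) -> str:
    """Deterministically assign a user to variant "A" or "B".

    Hashing experiment + user_id gives a stable, roughly uniform bucket,
    so a returning user always sees the same variant, and different
    experiments split users independently of each other.
    """
    if not 0.0 <= split_a <= 1.0:
        raise ValueError("split_a must lie in [0, 1]")
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    # First 6 bytes (48 bits) fit exactly in a float, so bucket is in [0, 1)
    bucket = int.from_bytes(digest[:6], "big") / 2**48
    return "A" if bucket < split_a else "B"


counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "new-ranking-model")] += 1
print(counts)  # expect a split close to 50/50
```

The same function works whether the "versions" are two ML models, two ranking algorithms, or two page layouts; only the experiment name changes.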

How is A/B testing used in practice?

A/B testing is widely applied to evaluate the performance of different versions of a model, product feature, or user interface. Organizations use A/B testing to:

- Optimize marketing strategies

- Improve website layouts and user journeys

- Enhance machine learning models

- Increase user engagement and conversion rates

What is the difference between A/B testing and usability testing?

Although both methods evaluate user interaction, their goals differ:

- A/B Testing: Measures which version performs better using quantitative data, like click-through rates or conversions.

- Usability Testing: Gathers qualitative data to understand why users behave a certain way through observation and feedback.

Pro Tip: Combining both methods can provide a more comprehensive view of user behavior.

What makes A/B testing essential for machine learning?

A/B testing bridges the gap between theoretical model performance and real-world user interactions. It ensures that changes made to models or features are effective in practical scenarios by providing measurable, data-driven insights.

Benefits include:

✔️ Improved accuracy of models

✔️ Reduced risks of deploying underperforming solutions

✔️ Enhanced user satisfaction and business outcomes

When should A/B testing be used?

A/B testing is best used in scenarios where:

- You need to compare two versions of a feature under identical conditions.

- User interactions can be measured.

- There is enough data or traffic to reach the sample size needed for statistically significant results.

Example: Testing a new SharePoint layout to see if it improves engagement.
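For a binary metric such as conversion, one standard way to check whether the difference between A and B is statistically significant is a two-sided two-proportion z-test. The sketch below uses only Python's standard library; the function name and the visitor and conversion counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference in conversion rates.

    Returns (z, p_value), using the pooled proportion under the null
    hypothesis that A and B convert at the same rate.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation in the data; the test is undefined")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: 200/5000 conversions for A, 260/5000 for B
z, p = two_proportion_z_test(conv_a=200, n_a=5000, conv_b=260, n_b=5000)
print(f"z = {z:.2f}, p = {p:.4f}")
print("significant at 0.05" if p < 0.05 else "not significant at 0.05")
```

The normal approximation is reasonable when both groups have a good number of conversions and non-conversions; for very small counts an exact test is usually preferred.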

When might A/B testing not be appropriate?

Consider alternatives to A/B testing in situations where:

- Multiple variables need to be tested simultaneously (use multivariate testing instead).

- There’s insufficient traffic to achieve statistically significant results.

- Ethical concerns prevent exposing users to certain variations.

How do A/B tests differ from multivariate tests?

- A/B Testing: Compares two versions to identify the better performer.

- Multivariate Testing: Tests multiple variables simultaneously to understand how different elements interact.

Multivariate testing provides deeper insights but requires larger sample sizes to ensure accurate results, because traffic is split across every combination of variants.
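The growth in required traffic comes from combinatorics: in a full-factorial multivariate design, every combination of variants becomes its own test cell. The element names, variants, and per-cell sample size below are hypothetical, and the sketch assumes each cell needs as many visitors as one arm of a simple A/B test.

```python
from itertools import product

# Hypothetical page elements and the variants tested for each
factors = {
    "headline": ["current", "benefit-led", "question"],
    "button_color": ["blue", "green"],
    "hero_image": ["photo", "illustration"],
}

# Full-factorial design: one test cell per combination of variants
cells = list(product(*factors.values()))
print(len(cells))  # 3 * 2 * 2 = 12

# Assumption: each cell needs as many visitors as one arm of an A/B test
n_per_cell = 4_000  # hypothetical per-arm requirement
print(len(cells) * n_per_cell, "visitors vs", 2 * n_per_cell, "for a simple A/B test")
```

Adding one more two-variant element would double the number of cells again, which is why multivariate tests are usually reserved for high-traffic pages.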

What are the key metrics commonly analyzed in A/B testing?

Metrics depend on the specific objectives of the test. Commonly analyzed metrics include:

- Conversion Rate: Percentage of users taking a desired action (e.g., filling out a form).

- Click-through Rate (CTR): Percentage of users clicking a specific link or ad.

- Engagement Time: How long users spend on a page or app.

- Revenue Per Visitor (RPV): Average revenue generated per visitor.

- Error Rate: Frequency of errors encountered during interactions.
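Each of these metrics is a simple ratio over logged activity. The following sketch computes them for one variant from hypothetical per-visitor records; the `VisitorRecord` fields are assumptions about what an analytics log might contain, and error rate is taken here as errors per interaction.

```python
from dataclasses import dataclass


@dataclass
class VisitorRecord:
    """Hypothetical per-visitor summary for one variant of a test."""
    clicked: bool             # clicked the tracked link or ad
    converted: bool           # completed the desired action, e.g. a form
    revenue: float            # revenue attributed to this visitor
    engagement_seconds: float
    interactions: int
    errors: int


def summarize(records):
    """Compute common A/B metrics for one variant.

    Rates are returned as fractions; multiply by 100 for percentages.
    """
    n = len(records)
    if n == 0:
        raise ValueError("no records")
    total_interactions = sum(r.interactions for r in records)
    return {
        "conversion_rate": sum(r.converted for r in records) / n,
        "ctr": sum(r.clicked for r in records) / n,
        "avg_engagement_seconds": sum(r.engagement_seconds for r in records) / n,
        "revenue_per_visitor": sum(r.revenue for r in records) / n,
        "error_rate": (
            sum(r.errors for r in records) / total_interactions
            if total_interactions else 0.0
        ),
    }


variant_b = [
    VisitorRecord(True, True, 40.0, 95.0, 12, 0),
    VisitorRecord(True, False, 0.0, 30.0, 5, 1),
    VisitorRecord(False, False, 0.0, 12.0, 2, 0),
    VisitorRecord(True, False, 0.0, 48.0, 6, 1),
]
print(summarize(variant_b))
```

Running the same `summarize` call on each variant's records gives directly comparable numbers for A and B.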

How can you determine the sample size required for an A/B test?

Calculating the sample size involves:

- The significance level (typically 0.05)

- The statistical power (commonly 80% or higher)

- The expected effect size (minimum detectable difference)

Using online calculators or statistical tools can help ensure accurate sample size estimates.
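For a conversion-rate metric, these inputs plus the baseline rate can be combined with the standard normal-approximation formula for comparing two proportions, $n = \frac{(z_{1-\alpha/2} + z_{1-\beta})^2 \, [p_1(1-p_1) + p_2(1-p_2)]}{(p_1 - p_2)^2}$ visitors per group, where $p_1$ is the baseline rate and $p_2 = p_1 + \text{minimum detectable difference}$. The sketch below assumes a two-sided test with equal group sizes; the function name and example rates are hypothetical, and dedicated calculators may use slightly different variants of the formula.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(baseline, mde, alpha=0.05, power=0.80):
    """Visitors needed in EACH group to detect an absolute lift `mde`
    over a `baseline` conversion rate (two-sided test, equal groups)."""
    p1 = baseline
    p2 = baseline + mde
    if not (0 < p1 < 1 and 0 < p2 < 1) or mde == 0:
        raise ValueError("rates must lie in (0, 1) and mde must be non-zero")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / mde ** 2)


# Hypothetical: 4% baseline conversion, aiming to detect an increase to 5%
print(sample_size_per_group(baseline=0.04, mde=0.01))
```

Halving the minimum detectable difference roughly quadruples the required sample, which is why small expected effects need substantial traffic.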
